Hooman Ramezani

I'm Hooman, a machine learning engineer passionate about applications of AI. I currently work as an AI Specialist Solutions Architect at Nebius (neo-cloud), where I'm involved in optimizing large-cluster LLM training and inference on H100/B200/GB200 GPUs. This includes leading performance research to unblock customers running large-scale training, raise MFU, and stand up large GPU clusters. I completed my BASc in Systems Design Engineering at the University of Waterloo and my MASc at the University of Toronto, where my thesis applied transformers to clinical workloads, including LLM and ViT models with multimodal data for lung cancer treatment. I'm skilled in ML infrastructure and platform engineering, including large-scale LLM training on GPU clusters, model compression (quantization, pruning, knowledge distillation), low-latency inference deployment with Triton and TensorRT, and building robust end-to-end MLOps and data pipelines. In my free time you can find me playing guitar. See more here.

Research and Projects

I am interested in Health AI, Machine Learning, and Computer Vision. My research experience spans previous work with the UW VIP Lab, various internships, and my Master's at UofT.

|

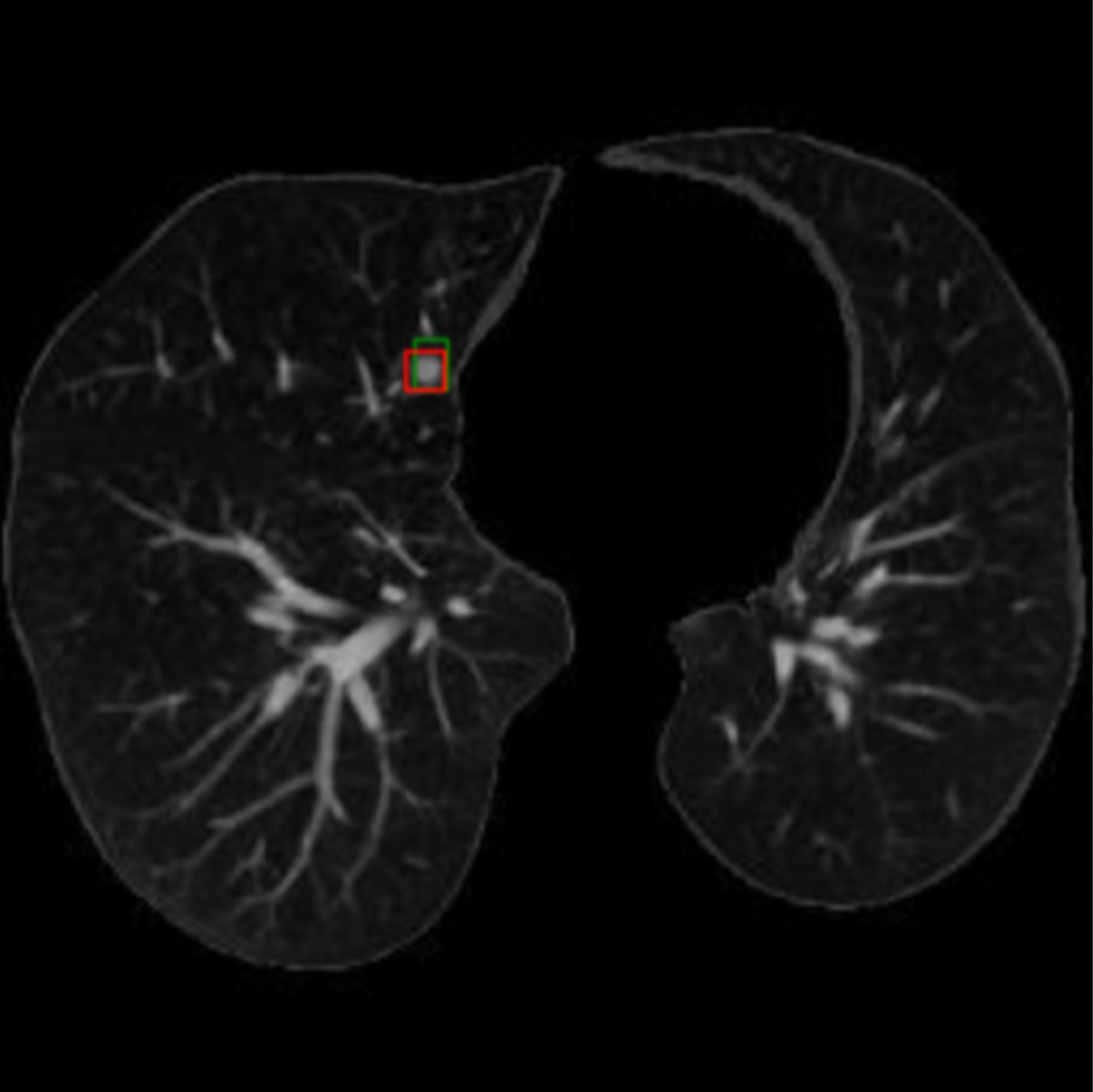

LN-Transformer: Lung Nodule Transformer for Sparse CT Segmentation

Hooman Ramezani, Dionne Aleman, Daniel Letourneau, arXiv, 2025 Paper Published a novel two-stage transformer for lung nodules segmentation featured in CVPRW, Strongest model on benchmark dataset Dice 91.4%, F1 94.2%, includes Meta SAM, DETR architectures. |

Lung-DETR: Deformable Detection Transformer for Sparse Lung Nodule Anomaly Detection

Hooman Ramezani, Dionne Aleman, Daniel Letourneau, arXiv, 2024

arXiv

A novel architecture based on the deformable detection transformer for detecting lung tumors, specifically designed to mitigate extreme class imbalance and find tumors among predominantly healthy tissue.

|

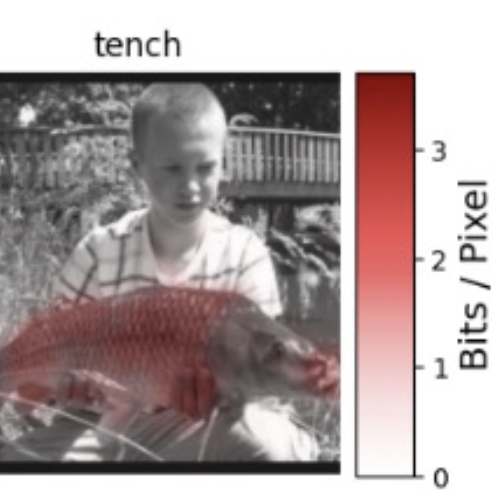

Enhancing DL Interpretability: IBA for Transformer Attribution

Hooman Ramezani, University of Toronto , 2024 Paper / Presentation Information Bottleneck Attribution (IBA) leverages principles from information theory to identify critical information in neural networks for decision-making attribution. In this work IBA is successfully applied to CNN and Transformer models, enabling a detailed analysis of model decision-making. |

|

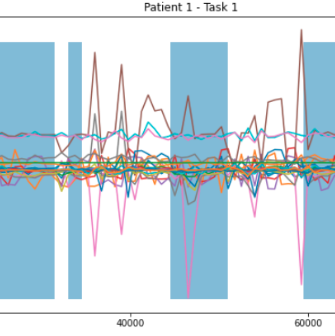

Parkinsons Freezing of Gait Detection

Hooman Ramezani, Medical Time Series Deep Learning, 2023 Paper / Github A deep learning network for time-series analysis designed to identify gait freezing in patients with Parkinson's disease, utilizing biometric signals for the prevention of falls. |

Rat-Brain-Inspired Reinforcement Learning for Optimal Pathfinding in Mazes

Hooman Ramezani, Computational Neuroscience, 2023

Paper / GitHub

A deep reinforcement learning model inspired by the basal ganglia of mouse brains, designed to master maze navigation using Q-learning. It showcases the intricacies of decision-making and learning as the model identifies optimal paths through mazes.

|

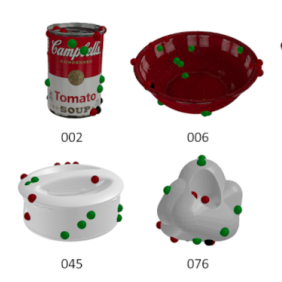

Grasp-Proposition-Net: Robotic Vision For Grasping Everyday Objects

Hooman Ramezani, UW VIP Lab , 2022 Github Developed a 3D computer vision model with VIP-Lab and Festo for a robotic arm, designed to determine optimal grasp points using LiDAR camera data. |

Drone-Aided Surface Defect Detection

Hooman Ramezani, Vision Model with Temporal Context, 2021

GitHub

A highly accurate embedded model for classifying surface defects via drones, using a convolutional-RNN architecture and synthetic data generation. The model is optimized for on-device execution in real-world applications.